In my previous article about k-Nearest Neighbor, I have shown you how I see this algorithm. However, it was terribly slow: my computer was calculating it for full 3 days. Some research shown that NumPy is the way to go here.

I know that NumPy is used a lot in Machine Learning, so that is definitely a no-brainer that I took it. Follow me and I show you how I done it!

Benchmark

While performance is clearly an issue, I believe you will agree with me that we need to be more specific here. We need some benchmark.

I decided not to be very fancy with it. Since testing on many k‘s takes a long time, a logical solution is to use only one k. So, first constraint is k=20.

After trying to run it, I waited about 10 minutes till I realized that I am not waiting that long. 😀

My intuition instantly told me to reduce the training set. So I did! I reduced training set from 50000 to 10000 examples.

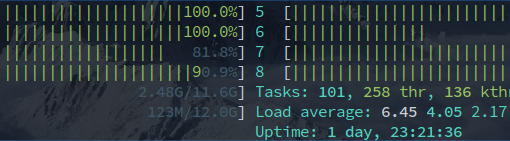

And my code still took 972.7 seconds to run. Oh well, time to improve it!

Our task

Let’s take same task from previous part. Table below is our data set.

Our goal is to classify (8, 5).

| Point | Label |

| (1, 2) | A |

| (3, 5) | A |

| (12, 5) | B |

| (-1, 8) | B |

Vectorizing distance calculation

Computers are getting better at performing operations with vectors and matrices. Modern processors evolved to support SIMD that boosts vector operations in lowest level. That is something that we need to do!

Maths behind it

$$ \sqrt{ { { \sum_{i=1}^n ( a_i – b_i )^2 } } } $$

Currently, we are performing calculations one-by-one. That means, as soon as we approach a point, we calculate full distance: take the difference, square that, add, repeat for all dimensions and take square root. And then we repeat for all points.

But what happens if we take a little bit different approach? Instead of performing that one-by-one calculations, lets do that in bunches! Check this out!

First of all, let’s calculate \( a_i – b_i \) for all points. Then square all of them respectively. After that take the sums of each point. At least, take a square root of each sum.

This may seem like a harder point of view, but it is basic linear algebra – vectors and matrices. If you are unfamiliar with matrices, imagine them as two-dimensional array. One row represents one point. Then, we have another matrix that consists of our target point. We just repeat that point as many times as there are training examples. And, in the end, we take difference of it.

$$ \begin{bmatrix} 1 & 2 \\ 3 & 5 \\ 12 & 5 \\ -1 & 8 \end{bmatrix} — \ \begin{bmatrix} 8 & 5 \\ 8 & 5 \\ 8 & 5 \\ 8 & 5 \end{bmatrix} = \ \begin{bmatrix} 1 – 8 & 2 – 5 \\ 3 – 8 & 5 – 8 \\ 12 – 8 & 5 – 5 \\ -1 – 8 & 8 – 5 \end{bmatrix} = \ \begin{bmatrix} -7 & -3 \\ -5 & 0 \\ 4 & 0 \\ -9 & 3 \end{bmatrix} $$

Our result is \( a_i – b_i \) for every point. I hope you see what this is all about! 🙂 Then, we have to square every element and add them to get vector with distances squared.

$$ \begin{bmatrix} (-7)^2 & (-3)^2 \\ (-5)^2 & 0^2 \\ 4^2 & 0^2 \\ (-9)^2 & 3^2 \end{bmatrix} = \ \begin{bmatrix} 49 & 9 \\ 25 & 0 \\ 16 & 0 \\ 81 & 9 \end{bmatrix} $$

$$ \ \begin{bmatrix} 49 + 9 \\ 25 + 0 \\ 16 + 0 \\ 81 + 9 \end{bmatrix} = \ \begin{bmatrix} 58 \\ 25 \\ 16 \\ 90 \end{bmatrix} $$

And, at last, lets take square root of each element to get distances.

$$ \begin{bmatrix} \sqrt{58} \\ \sqrt{25} \\ \sqrt{16} \\ \sqrt{90} \end{bmatrix} \approx \begin{bmatrix} 7.616 \\ 5 \\ 4 \\ 9.487 \end{bmatrix} $$

If you compare this with distances in previous post, then you will see that we got same values!

Implementing vectorized operations with NumPy

But actually, since we do not care about actual distance, we care about k closest items, we can dismiss square rooting to save some resources.

In Python, using NumPy, it looks like this:

class KNearestNeighbor(object):

# ....

def predict(self, features):

target = np.repeat([features], len(self._training_data), axis=0)

dists = np.sum(np.square(self._training_data - target), axis=1)

indices = dists.argsort()[:self._k]

labels = map(lambda x: {'label': self._training_labels[x]}, indices)

return self._choose_majority(labels)

And we got down to stunning 404.2 seconds! That is some good improvement!

Picking distance

What we can do now is use some more magical powder of NumPy and statistics.

There is a special data structure called frequency table. Concept is pretty simple. Frequency table describes how often each value is found in the data. And, at last, we pick k the most frequent ones.

Check this out:

class KNearestNeighbor(object):

# ....

def predict(self, features):

# ....

# We don't actually need to store labels in dictionary

labels = map(lambda x: self._training_labels[x], indices)

return np.bincount(labels).argmax()

Isn’t that neat? 🙂 And this small this bumps us down to 394.4 seconds!

Conclusion

That was some crazy performance increase! I wonder how fast this will be when calculations will be sent to GPU. 🙂

When performing this work, I definitely started seeing value that mathematics provide in my discipline. I feel grateful for sharpening my linear algebra skills. 😛

How you like that? Do you have similar experiences with vectorization? Let me know!